Generative AI has profoundly transformed the way we **create**, **imagine**, and **interact** with digital content. As AI models rapidly grow more capable, they also tend to demand more VRAM. For instance, the **Stable Diffusion 3.5 Large model** requires over **18GB of VRAM**, which limits the number of systems that can run it well.

To reduce these requirements, NVIDIA partnered with Stability AI to **quantize** the model: noncritical layers are removed or run at lower precision so the model runs more efficiently. Both the **NVIDIA GeForce RTX 40 Series** and the Ada Lovelace generation of NVIDIA RTX PRO GPUs support **FP8 quantization**, which lets these quantized models run faster and on more systems. The latest-generation **NVIDIA Blackwell GPUs** also add support for **FP4**, opening up further room for optimization.
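To make "running at lower precision" concrete, here is a minimal pure-Python sketch of per-tensor "fake" quantization: each value is scaled into a narrow floating-point format, rounded to the nearest representable number, and scaled back. It assumes the commonly used FP8 E4M3 layout (3 mantissa bits, exponent bias 7, max 448) and FP4 E2M1 layout (1 mantissa bit, exponent bias 1, max 6). It only simulates the rounding error; it is not how TensorRT or the GPU hardware stores or computes these types, and the helper names are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class MiniFloat:
    """A small floating-point format described by its bit layout."""
    name: str
    mantissa_bits: int
    exponent_bias: int
    max_value: float  # largest finite magnitude the format can represent


# Assumed layouts: FP8 E4M3 (max 448) and FP4 E2M1 (max 6).
FP8_E4M3 = MiniFloat("FP8 E4M3", mantissa_bits=3, exponent_bias=7, max_value=448.0)
FP4_E2M1 = MiniFloat("FP4 E2M1", mantissa_bits=1, exponent_bias=1, max_value=6.0)


def round_to_format(v: float, fmt: MiniFloat) -> float:
    """Round one value to the nearest representable number (ties to even)."""
    if v == 0.0:
        return 0.0
    sign = -1.0 if v < 0 else 1.0
    mag = abs(v)
    _, e = math.frexp(mag)  # mag = m * 2**e with 0.5 <= m < 1
    # floor(log2(mag)) is e - 1; below the smallest normal exponent the
    # spacing stays fixed (subnormal range).
    exponent = max(e - 1, 1 - fmt.exponent_bias)
    step = math.ldexp(1.0, exponent - fmt.mantissa_bits)  # gap between neighbors
    q = round(mag / step) * step  # Python's round() ties to the even integer
    return sign * min(q, fmt.max_value)  # saturate instead of overflowing


def fake_quantize(values: list[float], fmt: MiniFloat) -> tuple[list[float], float]:
    """Per-tensor scaled quantize-dequantize; returns (dequantized, scale)."""
    amax = max(abs(x) for x in values)
    scale = amax / fmt.max_value if amax > 0 else 1.0
    dequantized = [round_to_format(x / scale, fmt) * scale for x in values]
    return dequantized, scale


if __name__ == "__main__":
    weights = [0.2, 1.2, 2.5, 5.0, -6.0]
    for fmt in (FP8_E4M3, FP4_E2M1):
        deq, scale = fake_quantize(weights, fmt)
        print(fmt.name, scale, deq)
```

With FP4 E2M1 the example weights get a scale of 1.0 and collapse to `[0.0, 1.0, 2.0, 4.0, -6.0]`, which shows why FP4 needs careful scaling to preserve accuracy, while FP8 E4M3 keeps much finer spacing.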

RTX-Accelerated AI: A Game Changer

Thanks to the collaboration between **NVIDIA** and **Stability AI**, **Stable Diffusion 3.5**, one of the world’s most popular AI image models, has received a significant performance boost. With **TensorRT acceleration** and quantization, users can now generate and edit images with **greater speed** and **efficiency** on **NVIDIA RTX GPUs**.

Quantizing the **SD3.5 Large model** to **FP8** cuts its VRAM requirement by **40%**, down to just **11GB**. As a result, five **GeForce RTX 50 Series** GPU models, rather than just one, can now run the model entirely from memory. In addition, both the SD3.5 Large and Medium models have been optimized with **TensorRT**, an AI backend designed to take full advantage of **Tensor Cores**.
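The memory arithmetic can be checked with a few lines of Python. This sketch uses only the 18GB and 11GB figures from the text (treating "over 18GB" as a lower bound); the list of VRAM capacities is hypothetical and not tied to specific GPU products, and the optional `headroom_gb` margin for other workloads is an assumption.

```python
# VRAM figures quoted in the text for SD3.5 Large, in GB.
BF16_VRAM_GB = 18.0  # "over 18GB", so this is a lower bound
FP8_VRAM_GB = 11.0


def reduction_pct(before_gb: float, after_gb: float) -> float:
    """Percentage of memory saved when going from before_gb to after_gb."""
    return 100.0 * (before_gb - after_gb) / before_gb


def fits(required_gb: float, capacity_gb: float, headroom_gb: float = 0.0) -> bool:
    """True if the model plus an optional safety margin fits in VRAM."""
    return required_gb + headroom_gb <= capacity_gb


# Hypothetical VRAM capacities in GB, for illustration only.
CAPACITIES_GB = [8, 12, 16, 24, 32]

if __name__ == "__main__":
    print(f"Saved: {reduction_pct(BF16_VRAM_GB, FP8_VRAM_GB):.1f}%")
    print("BF16 fits:", [c for c in CAPACITIES_GB if fits(BF16_VRAM_GB, c)])
    print("FP8 fits: ", [c for c in CAPACITIES_GB if fits(FP8_VRAM_GB, c)])
```

With these inputs the saving works out to about 38.9%, which the text rounds to 40%, and the set of capacities that can hold the model grows from `[24, 32]` to `[12, 16, 24, 32]`.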

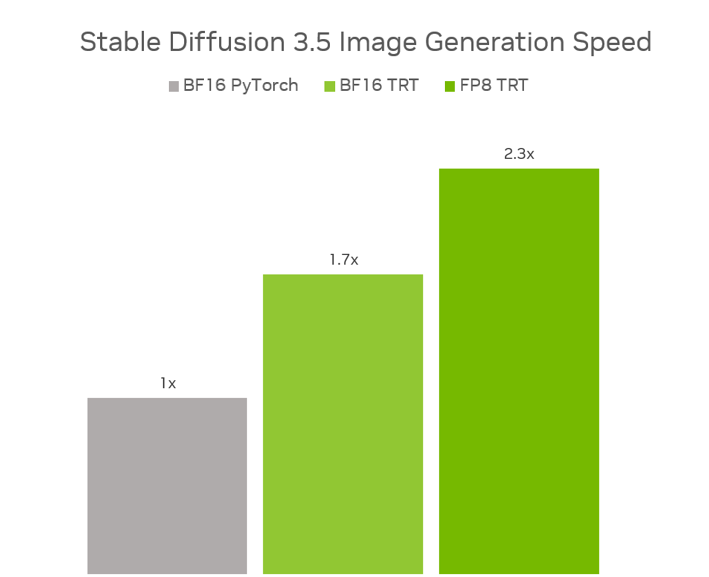

The results are impressive: **FP8 TensorRT** delivers a **2.3x performance boost** for **SD3.5 Large** compared with the original model in **BF16 PyTorch**, while using **40% less memory**. For **SD3.5 Medium**, **BF16 TensorRT** delivers a **1.7x speedup** over BF16 PyTorch.
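A speedup factor is often easier to reason about as time per image. This small sketch assumes the quoted speedups are ratios of time per image (baseline time divided by accelerated time); the 10-second BF16 PyTorch baseline is hypothetical and is applied to both models purely for illustration.

```python
def accelerated_seconds(baseline_s: float, speedup: float) -> float:
    """Per-image time after applying a speedup factor to the baseline."""
    return baseline_s / speedup


def latency_reduction_pct(speedup: float) -> float:
    """How much shorter each generation becomes, as a percentage."""
    return 100.0 * (1.0 - 1.0 / speedup)


# Speedups quoted in the text, relative to BF16 PyTorch.
SPEEDUPS = {
    "SD3.5 Large, FP8 TensorRT": 2.3,
    "SD3.5 Medium, BF16 TensorRT": 1.7,
}

if __name__ == "__main__":
    baseline_s = 10.0  # hypothetical BF16 PyTorch time per image
    for name, s in SPEEDUPS.items():
        print(f"{name}: {accelerated_seconds(baseline_s, s):.2f} s/image, "
              f"{latency_reduction_pct(s):.1f}% less time")
```

Under this reading, a 2.3x speedup means each image takes about 56.5% less time, and a 1.7x speedup about 41.2% less.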

The optimized models are available on **[Hugging Face’s Stability AI page](https://huggingface.co/stabilityai)**. NVIDIA and Stability AI also plan to release **SD3.5** as an **[NVIDIA NIM microservice](https://www.nvidia.com/en-us/ai-data-science/products/nim-microservices/)**, expected in July, which should make the model easier to access and deploy across a wide range of applications.

The Launch of the TensorRT for RTX SDK

At Microsoft Build, NVIDIA introduced **TensorRT for RTX**, now available as a standalone SDK for developers. It is also part of Microsoft’s new **[Windows ML](https://learn.microsoft.com/en-us/windows/ai/new-windows-ml/overview)** framework, currently in preview.

Previously, developers had to pre-generate and package TensorRT engines for each class of GPU. With the new version of TensorRT, a generic TensorRT engine can instead be optimized on-device in seconds. This **just-in-time (JIT) compilation** step can run in the background during installation or on first use.
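The deployment pattern described above (ship one generic model, build a device-specific engine the first time it is needed, then reuse it) can be sketched as a build-once cache. This is not the TensorRT for RTX API: `EngineCache`, `build_fn`, and the on-disk layout are hypothetical, and `fake_build` merely stands in for the real on-device optimization step.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Callable

# Hypothetical build step: (generic model bytes, device id) -> device-specific engine bytes.
BuildFn = Callable[[bytes, str], bytes]


class EngineCache:
    """Build a device-specific engine on first use, then reuse it from disk."""

    def __init__(self, cache_dir: Path, build_fn: BuildFn) -> None:
        self.cache_dir = cache_dir
        self.build_fn = build_fn
        self.cache_dir.mkdir(parents=True, exist_ok=True)

    def _key(self, model: bytes, device_id: str) -> str:
        # Hash the model and device together, so a new model or a different
        # GPU each get their own cached engine.
        h = hashlib.sha256()
        h.update(model)
        h.update(b"\0")
        h.update(device_id.encode("utf-8"))
        return h.hexdigest()

    def get(self, model: bytes, device_id: str) -> bytes:
        path = self.cache_dir / f"{self._key(model, device_id)}.engine"
        if path.exists():
            return path.read_bytes()  # cache hit: no rebuild needed
        engine = self.build_fn(model, device_id)  # JIT step (install or first use)
        path.write_bytes(engine)
        return engine


def demo() -> int:
    """Return how many times the stand-in build step actually ran."""
    builds: list[str] = []

    def fake_build(model: bytes, device_id: str) -> bytes:
        builds.append(device_id)
        return b"engine:" + device_id.encode("utf-8")

    with tempfile.TemporaryDirectory() as tmp:
        cache = EngineCache(Path(tmp), fake_build)
        cache.get(b"generic-model", "gpu-A")  # first use on gpu-A: builds
        cache.get(b"generic-model", "gpu-A")  # second use: loaded from cache
        cache.get(b"generic-model", "gpu-B")  # different GPU: builds again
    return len(builds)
```

Calling `demo()` returns 2: the build runs once per device, and the repeated request on `gpu-A` is served from the cache. Keying on both the model and the device keeps one shipped artifact portable while each machine keeps its own optimized engine.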

The newly streamlined SDK is **8x smaller** and can be easily integrated through **Windows ML**—Microsoft’s cutting-edge AI inference backend. Developers can access the new SDK from the **[NVIDIA Developer page](https://developer.nvidia.com/blog/run-high-performance-ai-applications-with-nvidia-tensorrt-for-rtx/)** or test it within the Windows ML preview.

For further insights, check out **[NVIDIA’s technical blog](https://developer.nvidia.com/blog/nvidia-tensorrt-for-rtx-introduces-an-optimized-inference-ai-library-on-windows/)** and the **[Microsoft Build recap](https://blogs.nvidia.com/blog/rtx-ai-garage-computex-microsoft-build/)**.

Join NVIDIA at GTC Paris

Don’t miss NVIDIA at **[GTC Paris](https://vivatechnology.com/)**, held at VivaTech, Europe’s largest startup and tech event. NVIDIA founder and CEO **Jensen Huang** delivered a keynote covering cloud AI infrastructure, **[agentic AI](https://blogs.nvidia.com/blog/what-is-agentic-ai/)**, and **[physical AI](https://www.nvidia.com/en-us/glossary/generative-physical-ai/)**. Be sure to catch the **[replay](https://www.nvidia.com/en-eu/gtc/)** of his keynote address.

GTC Paris continues through **June 12**, featuring hands-on demos and sessions led by industry experts. Whether you attend in person or join remotely, there’s still plenty to explore at the event. **[Check out the session catalog](https://www.nvidia.com/en-eu/gtc/session-catalog/?tab.allsessions=1700692987788001F1cG#/)** for more information.

Each week, the RTX AI Garage blog series delves into community-driven AI innovations, providing insights for those keen to learn about NVIDIA NIM microservices and AI Blueprints, as well as building AI agents, creative workflows, digital humans, and productivity apps on AI PCs and workstations.

Stay connected with NVIDIA AI PC on Facebook, Instagram, TikTok, and X—and subscribe to the RTX AI PC newsletter to stay updated.

Follow NVIDIA Workstation on LinkedIn and X.
