How Public Feeds Expose Sensitive Data: A Deep Dive into Meta AI’s Privacy Concerns

Key Takeaways

- Default Isn’t Always Private: Despite user settings, Meta AI chats can unintentionally surface publicly due to confusing sharing controls and multi-step opt-in processes.

- Sensitive Data at Risk: Individuals have inadvertently shared highly sensitive details, such as medical histories, legal disputes, and even tax-evasion strategies, on a publicly accessible feed.

- Identity Linkage: Chats conducted while logged into Facebook, Instagram, or WhatsApp can easily be traced back to user profiles, amplifying privacy and security dangers.

- Inadequate User Guidance: Meta’s in-app alerts and FAQ links do not adequately inform users about when their conversations might go public or how they may be used for AI training.

- Regulatory Gaps: This situation highlights the pressing need for AI privacy standards that mirror existing data protection laws, emphasizing private-by-default settings, explicit user consent, and clear UX protections.

The Unseen Dangers of Sharing: A Closer Look at Meta AI

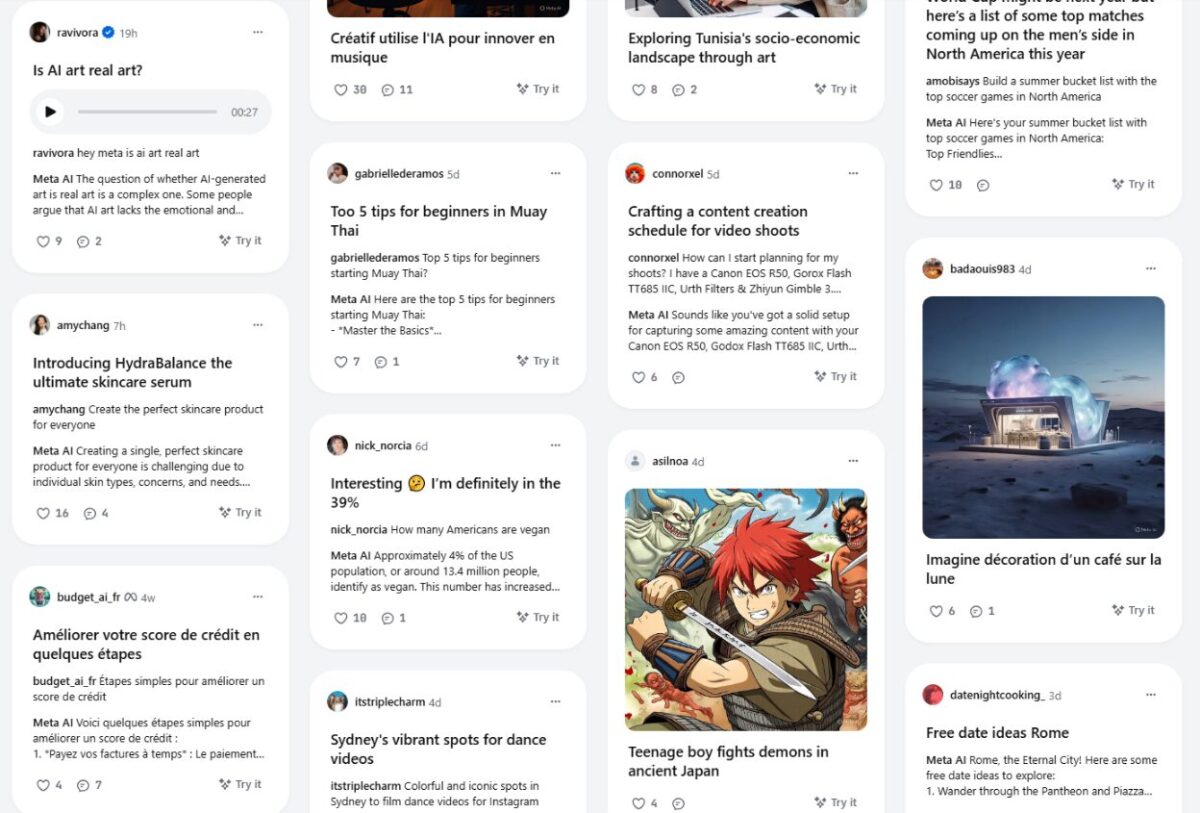

As users unintentionally broadcast highly sensitive medical, legal, and personal conversations to a global audience, scrutiny of Meta AI’s Discover feed has never been more urgent.

Meta AI entered the scene promising a seamless conversational assistant integrated into platforms like Facebook, Instagram, and WhatsApp. Yet many users are shocked to discover that tapping the “Share” button in their AI chat doesn’t merely send a conversation to friends; it publishes that conversation to a public “Discover” feed.

From tax-evasion strategies to complex medical concerns, these intimate exchanges are now open for anyone to view, often without the original posters realizing the implications. This article explores the design flaws, real-world impacts, and privacy implications of Meta’s public chat feed, offering a roadmap for users to reclaim their privacy and for regulators to protect it.

Design Flaws & User Confusion

Meta AI’s sharing workflow appears simple on the surface: after composing a prompt, users tap a Share icon and publish. However, the interface never clearly indicates that this action broadcasts the conversation globally. Privacy experts caution that such ambiguous design can lead to unintended disclosures.

Opaque “Share” Labeling

Unlike labels such as “Save” or “Export,” “Share” implies handing content to someone else, and in most apps that means a private exchange with friends or another application, not public dissemination.

Multi-Step Confirmation

Even after users preview a post, no in-app warning indicates that the Discover feed is visible to both logged-in and logged-out visitors.

Conflated Defaults

Although Meta AI chats are private by default, users who remain logged into any Meta service on the same device risk accidentally publishing private conversations.

Privacy expert Rachel Tobac describes this as “a UX failure that weaponizes convenience,” while the Electronic Privacy Information Center labels it “incredibly concerning” due to the sensitive nature of some shared content.

Real-World Exposure: What’s at Stake?

A quick glance at the Discover feed uncovers conversations never meant for anyone but the person who wrote them:

- Medical Queries: Users recount post-surgery symptoms and mental health struggles.

- Legal Advice: Individuals air entire discussions regarding job-termination arbitrations and draft eviction notices.

- Personal Confessions: From affairs to financial anxieties, private issues are broadcast alongside visible public profiles.

- Illicit Planning: Reports highlight conversations about tax evasion and fraudulent schemes—disclosures that may have serious consequences.

These instances reveal the unsettling truth: intimate matters, once confined to one-on-one conversations, are now archived and searchable by anyone.

Profile Linkage & Cross-Platform Risks

Engaging with Meta AI while logged into Facebook, Instagram, or WhatsApp exposes users to even greater risks:

- Logged-In Defaults: Meta AI inherits your logged-in state, meaning a simple query can be directly linked to your social profile.

- Discover Feed Access: Shared chats can be viewed by both logged-in and logged-out users, increasing the potential for data leaks beyond one’s immediate network.

- Reputation & Safety: Exposed personal information can lead to doxxing, social engineering, and targeted harassment, all made worse by the information already visible on linked social accounts.

Privacy advocates emphasize that connecting AI chats to social identities without clear consent violates fundamental expectations regarding private versus public communication.

Meta’s Response & User Remedies

In its defense, Meta maintains that chats remain private unless users actively choose to share them. A spokesperson has pointed to the multi-step sharing process and directed users to the company’s Privacy Center. However, the app offers no real-time warnings about the public nature of the Discover feed.

To safeguard your data, consider these actions:

- Disable Sharing in the App: Set your prompts to “Only Me” in Meta AI’s settings.

- Log Out Before You Chat: If you must use Meta AI, do so in a logged-out browser or incognito mode.

- Opt Out of AI Training: Navigate to Settings → Privacy Center → AI at Meta to submit a request to prevent your conversations from being used for model training.

- Delete Past Prompts: Use commands like /reset-ai in Messenger or Instagram to erase your AI chat history.

These steps offer some relief, but they unfairly shift the burden onto users, who must decipher a convoluted interface on their own.

Regulatory and Ethical Implications

Current data protection laws, such as the GDPR in Europe and the CCPA in California, require clear user consent and transparent data use. The public nature of Meta AI’s Discover feed appears to run afoul of these principles in several ways:

- Lack of Explicit Consent: Users aren’t adequately informed that their chats will be made public.

- Opaque Data Usage: Meta’s chatbot acknowledges that conversations may help train models, yet it fails to explain how shared chats are treated differently from private ones.

- Insufficient Controls: The absence of proactive alerts or default privacy nudges contradicts emerging AI governance frameworks such as the EU’s AI Act.

Regulators should make clear that any AI platform offering public sharing must obtain explicit, contextual consent for each piece of sensitive data, with no hidden settings or after-the-fact opt-outs.

Designing AI with Privacy in Mind

Meta AI’s exposure of personal chats offers a critical lesson in UX-driven privacy failures. Trust in AI depends on clear boundaries between private inquiries and public broadcasts. To move forward, Meta needs to rethink its sharing workflow: add explicit warnings, adopt privacy by design as the default, and provide simple options for removing content.

Only then can users explore the transformative promises of AI without the fear that their deepest confessions will become tomorrow’s headlines.

About the Author

Nadica Naceva

Based at Influencer Marketing Hub, Nadica Naceva writes, edits, and manages a spectrum of content, ensuring it resonates authentically with readers. When not deep into drafts, she teaches others to discern quality writing from fluff.