Responsible AI as a Business Necessity: Understanding the Forces Driving Market Adoption

In the ongoing dialogue about artificial intelligence (AI), ethical principles, regulatory debates, and philosophical considerations tend to dominate the narrative. These conversations frequently miss a crucial audience: the business and technology leaders who are instrumental in AI implementation. For AI ethics to resonate with these decision-makers, the discussion needs to be reframed, shifting the focus from moral imperatives alone to the strategic business advantages that responsible AI offers: greater resilience, lower operational risk, and a protected brand reputation.

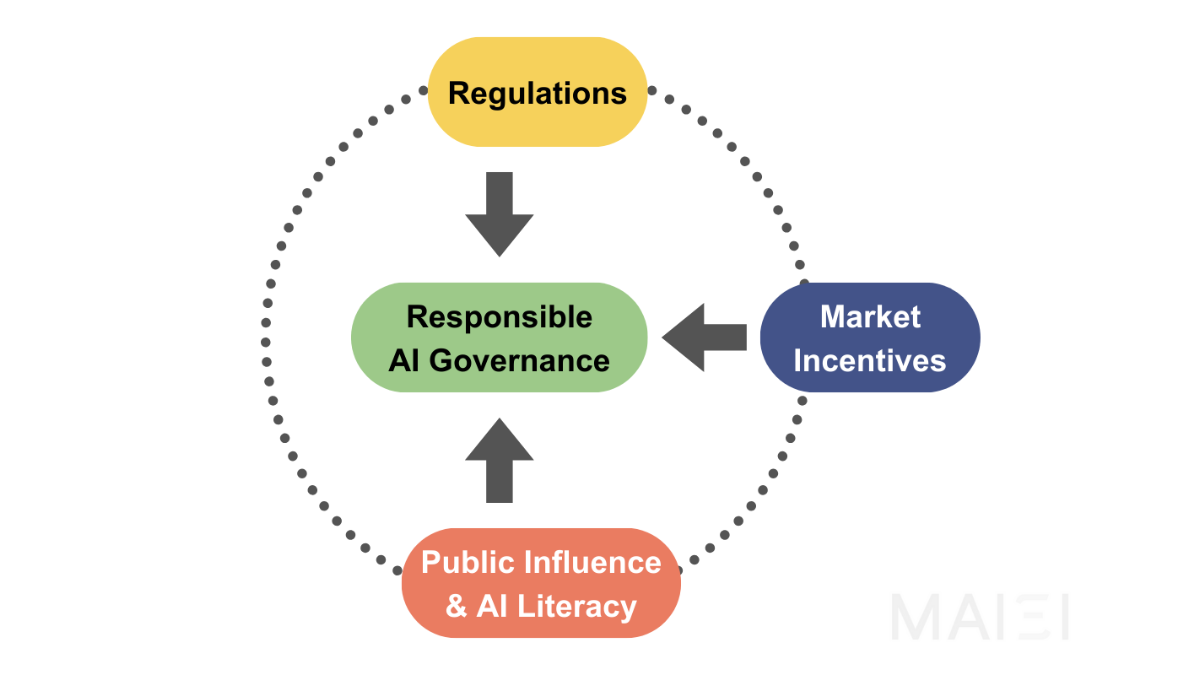

This article explores three pivotal forces shaping the adoption of AI governance: top-down regulation, market pressure, and bottom-up public influence. As these elements converge, companies will increasingly view responsible AI not just as an ethical ideal, but as an essential business requirement, ultimately fostering a landscape where corporate goals align with societal interests.

Figure 1: Converging forces driving responsible AI governance adoption.

The Top-Down Regulatory Landscape: Setting the Rules

Across the globe, AI regulatory efforts have largely adopted risk-tiered approaches to governance. For instance, the EU AI Act classifies AI applications by the risk they pose, imposing stricter requirements on high-risk systems while prohibiting uses deemed to carry unacceptable risk. Beyond legal obligations, standards such as ISO/IEC 42001 provide benchmarks for managing AI systems and their risks. Voluntary frameworks such as the NIST AI Risk Management Framework offer further guidance for organizations eager to adopt responsible AI practices.
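To make the idea of risk tiering concrete, the following is a minimal sketch in Python of how an organization might triage its own AI use cases. The tier names follow the commonly described structure of the EU AI Act, but the use-case mapping, the `assess` function, and the `Assessment` fields are hypothetical and purely illustrative; an actual classification depends on the regulation's text and on legal review.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"  # prohibited outright
    HIGH = "high"                  # strictest obligations
    LIMITED = "limited"            # mainly transparency obligations
    MINIMAL = "minimal"            # no additional obligations


# Hypothetical, simplified mapping for illustration only.
# It is an assumption of this sketch, not a legal determination.
ILLUSTRATIVE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "credit_scoring": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


@dataclass(frozen=True)
class Assessment:
    use_case: str
    tier: RiskTier
    may_deploy: bool
    needs_conformity_review: bool


def assess(use_case: str) -> Assessment:
    """Return an illustrative triage result for a named use case."""
    # Unknown use cases default to HIGH so they are reviewed
    # rather than waved through.
    tier = ILLUSTRATIVE_TIERS.get(use_case, RiskTier.HIGH)
    return Assessment(
        use_case=use_case,
        tier=tier,
        may_deploy=tier is not RiskTier.UNACCEPTABLE,
        needs_conformity_review=tier is RiskTier.HIGH,
    )
```

Defaulting unlisted use cases to the high-risk tier is a deliberately conservative design choice in this sketch: anything the organization has not yet classified is routed to review instead of being treated as harmless.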

Navigating EU regulatory compliance can be especially challenging for startups and small to medium-sized enterprises (SMEs), yet compliance is not optional for any company operating across borders. American AI firms serving European clients, for example, must abide by the EU AI Act, just as multinational financial institutions must adapt to varied regulatory landscapes.

Case Study: Microsoft provides a prime example of proactive alignment with evolving regulations. The company has adapted its AI development principles to meet new legal requirements, such as those set forth in the EU AI Act. In a climate characterized by geopolitical volatility and concerns over digital sovereignty, this strategy allows Microsoft to reduce cross-border compliance risks while upholding customer trust across jurisdictions. Consequently, it has emerged as a reliable provider in tightly regulated sectors like healthcare and finance, where AI adoption hinges on compliance assurances.

The Middle Layer: Market Forces Driving Responsible AI Adoption

While regulatory frameworks apply pressure from above, market forces will catalyze an internal shift toward responsible AI practices. Companies that embed risk mitigation strategies into their core operations can gain a competitive edge in three essential dimensions:

1. Risk Management as a Business Enabler

Deploying AI systems brings various operational, reputational, and regulatory risks that must be vigilantly managed and mitigated. Organizations employing automated risk management tools for monitoring often see enhanced operational efficiency and increased resilience. According to the April 2024 RAND report titled “The Root Causes of Failure for Artificial Intelligence Projects and How They Can Succeed,” inadequate infrastructure investment and underdeveloped risk management practices significantly contribute to AI project failures. Thus, adopting robust AI risk management systems not only minimizes failure rates but also facilitates faster and more reliable AI system deployments.

Financial institutions exemplify this trend. As they transition from traditional settlement cycles (T+2 or T+1) to real-time (T+0) blockchain transactions, their risk management teams are increasingly adopting dynamic frameworks to ensure resilience to rapid changes. The emergence of tokenized assets and atomic settlements introduces ongoing, real-time risk considerations, necessitating a 24/7 monitoring approach, which institutions like Moody’s Ratings are currently assessing for broader implementation.

2. Turning Compliance into a Competitive Advantage: The Trust Factor

AI providers depend on market adoption, and organizations implementing AI solutions depend on internal buy-in to realize operational gains. In both situations, trust is paramount. Companies that integrate responsible AI principles into their business frameworks distinguish themselves as trustworthy providers, gaining advantages in procurement processes where ethical considerations play an increasingly important role.

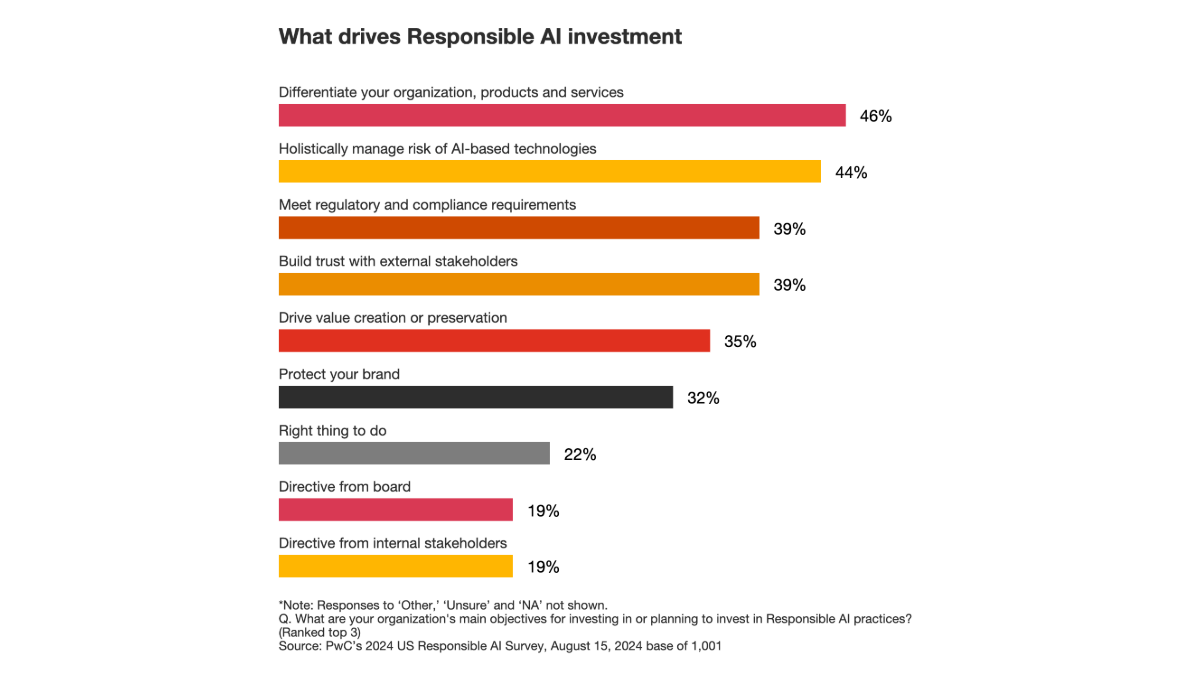

A report from PwC’s 2024 US Responsible AI Survey found that 46% of executives view responsible AI as a significant objective for achieving competitive advantage, closely followed by risk management at 44%.

Figure 2: A graph depicting the drivers of responsible AI investment.

3. Public Stakeholder Engagement as a Growth Strategy

The stakeholder spectrum doesn’t stop at regulatory bodies; it encompasses customers, employees, investors, and communities affected by AI. Engaging diverse perspectives throughout the AI lifecycle, from design and development to deployment and decommissioning, yields insights that improve product-market fit while mitigating potential risks.

By implementing structured engagement processes with stakeholders, organizations gain dual benefits: they create AI solutions that align with user needs and build trust through transparency. This trust fosters customer loyalty, employee engagement, and investor confidence, all vital components for sustainable business growth.

The Partnership on AI’s 2025 Guidance for Inclusive AI underscores the importance of engaging the public:

“When companies actively involve the public—whether users, advocacy groups, or impacted communities—they foster a sense of shared ownership over the technology that’s being developed. This trust can translate into stronger consumer relationships, greater public confidence, and ultimately, broader adoption of AI products.”

The Bottom-Up Push: Public Influence and AI Literacy

Public awareness and AI literacy initiatives significantly shape expectations around governance. Organizations like the Montreal AI Ethics Institute and All Tech is Human equip citizens, policymakers, and businesses with the tools to critically assess AI systems and hold developers accountable. As public understanding deepens, consumer choices and advocacy increasingly reward organizations that practice responsible AI while penalizing those that operate with inadequate safeguards.

This grassroots movement fosters a dynamic feedback loop between civil society and industry. Companies that actively engage with public concerns and transparently share their responsible AI initiatives not only mitigate reputational risks but also position themselves as leaders in a trust-driven economy.

Case Study: As mentioned earlier, the Partnership on AI’s Guidance for Inclusive AI emphasizes the necessity of consulting with workers and labor organizations prior to deploying AI-driven automation. Engaging early helps to uncover hidden risks to job security, worker rights, and overall well-being—issues that otherwise might surface only after negative impacts occur.

Moving Beyond Voluntary Codes: A Pragmatic Approach to AI Risk Management

For years, discussions about AI ethics revolved around voluntary principles and non-binding guidelines. However, as AI technologies become increasingly crucial in sectors like healthcare, finance, and national security, organizations can no longer afford to rely solely on abstract ethical commitments. The future of AI governance will hinge on three key developments:

- Sector-Specific Risk Frameworks that acknowledge the unique challenges of AI deployment in each industry. Organizations that master governance tailored to their specific use cases will secure a competitive edge in procurement processes.

- Automated Risk Monitoring and Assessment Systems capable of continuous evaluation across various risk levels. Such systems will empower organizations to scale AI governance efficiently across expansive enterprises without imposing excessive compliance burdens.

- Market-Driven Certification Programs that signal responsible AI practices to customers, partners, and regulators. Just as cybersecurity certifications have become essential credentials, these certifications will be crucial for AI providers aiming to establish credibility in the marketplace.
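As an illustration of the automated monitoring described in the list above, here is a minimal Python sketch of a check that applies tighter tolerances to higher-risk systems. Everything in it is an assumption made for illustration: the `Reading` type, the `drift_score` metric, the `DRIFT_LIMITS` thresholds, and the system names are hypothetical and not drawn from any particular tool or standard.

```python
from dataclasses import dataclass

# Hypothetical thresholds: the maximum tolerated drift score per risk level.
# Higher-risk systems get a tighter limit.
DRIFT_LIMITS = {"high": 0.10, "limited": 0.20, "minimal": 0.30}


@dataclass(frozen=True)
class Reading:
    system: str
    risk_level: str     # must be a key of DRIFT_LIMITS
    drift_score: float  # assumed to lie in [0, 1]; higher means more drift


def find_breaches(readings):
    """Return (system, drift_score, limit) for each reading over its limit."""
    breaches = []
    for reading in readings:
        # Raises KeyError for an unknown risk level, so misconfigured
        # systems surface immediately instead of being skipped.
        limit = DRIFT_LIMITS[reading.risk_level]
        if reading.drift_score > limit:
            breaches.append((reading.system, reading.drift_score, limit))
    return breaches
```

Run on a schedule against live metrics, a check of this kind lets the same drift score trigger an alert for a high-risk system while passing for a lower-risk one, which is one way governance effort can scale with risk rather than with the number of systems.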

The transformation of cybersecurity from a narrow IT issue into a core enterprise strategy serves as an apt analogy. Two decades ago, cybersecurity was often an afterthought; today, it is a cornerstone of business strategy. AI governance is on a similar path, evolving from a purely ethical consideration into a fundamental business function.

Conclusion: Responsible AI as a Market-Driven Imperative

The responsible AI agenda must align with market realities. As global competition in AI intensifies, organizations that effectively manage AI risks while nurturing public trust will emerge as frontrunners. These companies will not only adeptly navigate complex regulatory landscapes but will also command customer loyalty and investor confidence in an increasingly AI-driven economy.

Looking ahead, we envision a future in which standardized AI governance frameworks balance innovation with accountability, fostering an ecosystem where responsible AI becomes the norm rather than the exception. Businesses that recognize this shift early and adapt accordingly will be well positioned in a landscape where ethical considerations and commercial performance are closely intertwined.